Introduction

In the world of stock trading and wealth management, we are always looking for the “next big thing”—that one asset that will provide consistent returns regardless of market volatility. While the stock market has its ups and downs, there is one investment that has shown a continuous “Bull Run” for over a decade: Human Capital in Technology. Specifically, the shift toward automation and cloud-native engineering has made the Certified DevOps Professional (CDP) one of the most valuable “blue-chip” assets an individual can possess.

When you invest in a CDP, you are not just learning a set of tools; you are acquiring a methodology that increases the “Digital Velocity” of an entire organization. In a competitive economy, the companies that win are the ones that can release software faster and more reliably than their peers. By becoming the architect of that speed, you position yourself in the highest earning bracket of the tech industry. This isn’t just a job upgrade; it is a strategic move to ensure your professional portfolio remains profitable even during economic downturns.

Strategic Certification Overview

| Track | Level | Who it’s for | Prerequisites | Skills Covered | Recommended Order | Official Link |
| --- | --- | --- | --- | --- | --- | --- |
| DevOps | Professional | Engineers, Architects, & Tech Leads | Linux & Scripting Basics | CI/CD, K8s, Terraform, Cloud ROI | Core Foundation | CDP |

Why Choose DevOpsSchool for Your Career Growth?

Choosing the right mentor is like choosing the right stockbroker; it determines the quality of your returns. DevOpsSchool has established itself as a premier institution because it focuses on “Production-Ready” skills rather than just theory.

- Mentorship from Industry Veterans: You aren’t just learning from teachers; you are learning from engineers who have spent 20+ years managing massive infrastructures. This “tribal knowledge” is what helps you navigate complex real-world challenges.

- A Focus on ROI (Return on Investment): The curriculum is designed to target the exact tools and methodologies that are currently in high demand by top-tier global firms.

- Hands-on Cloud Labs: You get access to actual cloud environments where you can build, break, and fix systems. This practical experience is what gives you the confidence to lead a team.

- Comprehensive Career Support: From specialized “Interview Kits” to resume building tailored for the DevOps market, they ensure your transition into a new role is as smooth as possible.

- Lifetime Learning Access: In tech, the “market” changes every six months. DevOpsSchool provides lifetime access to their updated LMS, ensuring your skills never depreciate.

Certification Deep-Dive: Certified DevOps Professional (CDP)

What is this certification?

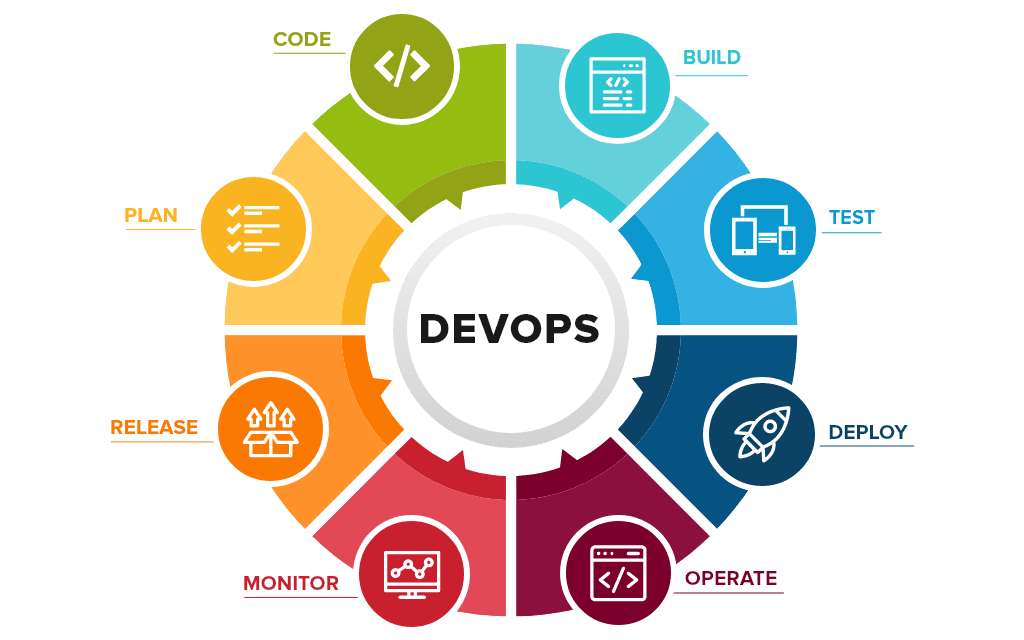

The Certified DevOps Professional (CDP) is a high-level validation of your ability to manage the modern “Software Supply Chain.” It covers the entire journey of code—from the moment a developer writes a line to the moment it serves a customer in a global production environment. It is the definitive credential for those who want to be seen as “System Architects” rather than just technicians.

Who should take this certification?

This track is designed for the “Growth-Oriented” professional. It is ideal for Software Developers who want to take control of their own release cycles and for System Administrators who want to pivot into the high-paying world of Site Reliability Engineering (SRE). It is also valuable for Engineering Managers who need to understand the technical “Moving Parts” of a modern, automated department.

Skills you will gain

- High-Velocity Delivery: Master the art of building CI/CD pipelines that can handle hundreds of deployments a day without breaking.

- Elastic Infrastructure: Learn to use Kubernetes to manage thousands of containers, ensuring your application stays online even during massive traffic spikes.

- Declarative Provisioning: Use Terraform to “write” your data center as code, making it easy to replicate, version, and secure.

- Operational Observability: Learn how to use data and metrics to predict system failures before they occur, keeping the “business engine” running 24/7.

- Security-First Automation: Integrate security tools directly into the pipeline, ensuring that speed never comes at the cost of safety.

Real-world projects you should be able to do after this certification

- Automated Disaster Recovery: Build a system that can automatically detect a regional cloud outage and move the entire operation to a different part of the world in minutes.

- Cost-Efficient Scaling: Design an infrastructure that “breathes”—growing when the market is busy and shrinking when it’s quiet to save the company thousands in cloud costs.

- Self-Healing Clusters: Set up a Kubernetes environment that automatically detects “sick” application instances and replaces them with healthy ones instantly.
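As a rough illustration of the scaling and self-healing projects above, the Python sketch below shows two ideas: the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × current metric / target metric)), and a toy reconciliation step that replaces unhealthy instances. The names `desired_replicas`, `Instance`, and `reconcile` are hypothetical and are not part of the CDP material or of any Kubernetes API. In a real cluster this work is done by the platform's controllers, not by application code.

```python
import math
from dataclasses import dataclass
from typing import List


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Proportional scaling rule in the style of the Horizontal Pod Autoscaler.

    The min/max defaults are illustrative assumptions, not recommended values.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp so the system neither scales to zero nor grows without bound.
    return max(min_replicas, min(max_replicas, raw))


@dataclass
class Instance:
    """Hypothetical stand-in for one running application instance."""
    name: str
    healthy: bool


def reconcile(instances: List[Instance], desired: int) -> List[Instance]:
    """Drop unhealthy instances, then add fresh ones up to the desired count."""
    if desired < 0:
        raise ValueError("desired must not be negative")
    # Keep only healthy instances, trimming extras if we are over the target.
    survivors = [i for i in instances if i.healthy][:desired]
    taken = {i.name for i in survivors}
    counter = 0
    while len(survivors) < desired:
        name = f"replacement-{counter}"
        counter += 1
        if name in taken:
            continue  # avoid reusing a name that is already running
        survivors.append(Instance(name=name, healthy=True))
        taken.add(name)
    return survivors
```

For example, with 4 replicas averaging 90% CPU against a 60% target, `desired_replicas(4, 90.0, 60.0)` returns 6. When load falls, the same rule shrinks the fleet again, which is the “breathing” behavior described above.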

Preparation plan

7–14 Days Plan (The Expert Rally)

This is for the seasoned pro. Spend the first 4 days aligning your existing knowledge with the CDP framework. Spend the next 6 days focused purely on the “Cloud-Native” stack (Docker & Kubernetes). Use the final 4 days for mock exams to build your testing stamina and identify any lingering gaps in your knowledge.

30 Days Plan (The Professional Portfolio)

This is the “Golden Ratio” for most working professionals. Dedicate 90 minutes a day.

- Week 1: Master Git and CI/CD logic.

- Week 2: Deep-dive into Containerization (Docker).

- Week 3: Master Orchestration (Kubernetes).

- Week 4: Focus on Infrastructure as Code (Terraform) and the final assessment.

60 Days Plan (The Long-Term Investment)

If you are new to the field, take this path. The first 30 days must be 100% about the “Bedrock”: Linux and Scripting. Without these, the automation tools will never make sense. The second 30 days should be spent building “End-to-End” projects that connect every tool in the DevOps ecosystem.

Common mistakes to avoid

- Chasing Every New Tool: Don’t get distracted by “shiny” new tools. Master the core pillars (Git, Jenkins, K8s, Terraform) first.

- Ignoring the Culture: DevOps is as much about people as it is about code. If you don’t learn how to collaborate, the tools won’t save you.

- Skipping the Terminal: You cannot learn DevOps through a mouse. You must become a master of the command line.

Best next certification after this

- Same track: Advanced Kubernetes Operator.

- Cross-track: Certified DevSecOps Professional.

- Leadership: Engineering Management Professional.

Choose Your Learning Path

- DevOps: The “Blue Chip” path for those who want to be versatile and valuable across any industry.

- DevSecOps: The “High-Security” path for those who want to protect assets in finance or government sectors.

- SRE: The “Performance” path for those who love deep technical engineering and system reliability.

- AIOps: The “Innovation” path for those using AI to manage the complexity of modern cloud systems.

- DataOps: For those who want to automate the pipelines that feed the world’s data engines.

- FinOps: The “Economist” path for those who want to master the financial optimization of the cloud.

Role → Recommended Certifications Mapping

- Senior Developer: CDP + Kubernetes Certified Developer.

- Cloud Operations: CDP + Terraform Specialist + AWS/Azure Architect.

- SRE: CDP + SRE Practitioner + Advanced Monitoring.

- Security Engineer: DevSecOps Professional + Cloud Security Expert.

- Tech Lead/Manager: Certified DevOps Leader + Engineering Management Professional.

Your Growth Roadmap: The Power of Compounding Skills

The CDP is just the beginning. To truly maximize your career ROI, you should follow a “Stacked” learning strategy:

- Foundation: Earn the CDP.

- Specialization: Master Advanced Kubernetes Operations.

- Security: Earn the Certified DevSecOps Professional title.

- Leadership: Earn the Engineering Management Professional to move into the C-suite of technology.

Institutions Supporting Your Professional Journey

DevOpsSchool

The premier institution for practical, hands-on DevOps education. They focus on turning students into “Engineers” who can handle the pressures of a real production environment. Their global community and lifetime support make them a top-tier choice for any professional.

Cotocus

Excellent for those looking to specialize in Platform Engineering. They provide high-end training for teams that want to build their own internal developer platforms.

ScmGalaxy

A massive resource hub for anyone looking to stay current with the latest open-source automation tools and collaborative engineering practices.

devsecopsschool.com

The definitive destination for those who want to make security a core part of their automation strategy.

aiopsschool.com

Focused on the future of “Autonomous IT.” This is where you learn how to use AI to manage systems that are too large for human oversight.

Essential FAQs for the Career Investor

General FAQs

1. Is the CDP exam harder than a standard cloud cert?

Answer: It is more practical. It tests your ability to solve engineering problems, not just your memory.

2. Do I need to be a developer to pass?

Answer: No, but you should be comfortable with the logic of automation and basic scripting.

3. What is the salary growth after CDP?

Answer: Professionals often see a 30% to 50% increase in their market value after becoming certified.

4. Is it recognized globally?

Answer: Yes, it follows the standards used in Silicon Valley, London, and Bangalore.

5. How much study time is needed?

Answer: For most, 60 days of consistent, 1-hour study is the perfect balance.

6. Will this help me get a remote job?

Answer: Absolutely. Managing cloud infrastructure is one of the most remote-friendly roles in the world.

7. Is there a focus on cost-saving?

Answer: Yes, the CDP teaches you how to build efficient systems that don’t waste cloud budget.

8. What if I have no IT experience?

Answer: Follow the 60 Days Plan (The Long-Term Investment). It starts with the absolute basics of Linux.

9. Can I take the exam from home?

Answer: Yes, the exam is online-proctored for your convenience.

10. Does it cover AWS?

Answer: It teaches principles that work on AWS, Azure, GCP, and even on-premises servers.

11. Is there placement assistance?

Answer: Yes, institutions like DevOpsSchool provide interview kits and career coaching.

12. Is it worth the money?

Answer: Given the salary jump, the certification usually pays for itself in the first two months of a new role.

CDP Specific FAQs

13. Is the exam lab-based?

Answer: Yes, it includes scenarios where you must diagnose and solve infrastructure issues.

14. What are the core tools?

Answer: Git, Jenkins, Docker, Kubernetes, and Terraform are the five core tools covered.

15. Does it cover microservices?

Answer: Yes, microservices deployment is a major part of the Docker/K8s sections.

16. Will I learn about security?

Answer: Yes, basic DevSecOps principles are integrated into the CDP track.

17. Is there mentorship?

Answer: Yes, you get access to expert mentors who can help you when you get stuck in a lab.

18. Can I transition from testing to DevOps?

Answer: Yes, QA professionals make some of the best DevOps engineers.

19. What is the pass rate?

Answer: For those who complete the labs, the pass rate is consistently over 95%.

20. Can I skip CDP and go to SRE?

Answer: Not recommended. CDP provides the automation “engine” that SRE is built upon.

New Voices from the Industry

Arvind K.

“I viewed my CDP as an investment in a ‘Blue Chip’ stock. The returns have been incredible. I moved from a support role to a Senior DevOps role with a 45% hike in six months.”

Sneha P.

“The mentorship at DevOpsSchool was the difference-maker. They didn’t just teach me how to run a command; they taught me how to think like a Cloud Architect.”

Rahul M.

“I used to spend my weekends manual-patching servers. After the CDP, I automated everything. Now I spend my weekends focusing on high-level strategy and my personal life.”

Tanvi S.

“The focus on Kubernetes and Terraform in the CDP was exactly what recruiters were looking for. I had three job offers within two weeks of getting my certification.”

Vikas G.

“As a manager, the CDP helped me understand how to reduce our cloud bill and increase our release speed. It’s the best ROI I’ve ever seen for my team’s training budget.”

Conclusion

The Certified DevOps Professional (CDP) is the highest-performing asset in the modern technical market. It offers immediate gains in salary and authority, while providing long-term security against a changing economy. By mastering the art of the “Software Supply Chain,” you are ensuring that your career remains in a permanent “Bull Market.” Don’t just work in tech—invest in your ability to lead it.